INSAIT creates leading Bulgarian-first LLM with Gemma 2

The Institute for Computer Science, Artificial Intelligence and Technology (INSAIT) is a world-class research organization in Sofia, Bulgaria. Since its founding in 2022, INSAIT has attracted top academics and researchers from around the world looking to advance what’s possible in tech. In its push to expand LLM accessibility in Bulgaria, INSAIT created BgGPT, a Bulgarian large language model (LLM) that understands conversational and instruction-based tasks in Bulgarian and English.

After experimenting with other models for BgGPT’s foundation, the BgGPT team decided that Google’s Gemma family of open models was best suited for the task, thanks to its comparatively better performance in Bulgarian and English and its compact size. Using Gemma’s superior language capabilities, INSAIT was able to create a far more efficient and effective bilingual model.

The challenge

INSAIT observed an absence of strong natural language processing (NLP) models for Bulgarian, as most of the world's LLMs focus on English or on Eastern languages like Chinese. This scarcity also meant a lack of conversational AI agents that deeply understood the Bulgarian language and its cultural nuances while remaining reasonably inexpensive to operate. INSAIT knew that to establish a presence for Bulgaria and Eastern Europe in the AI world, it would have to build its own LLM with powerful and accurate performance.

The solution

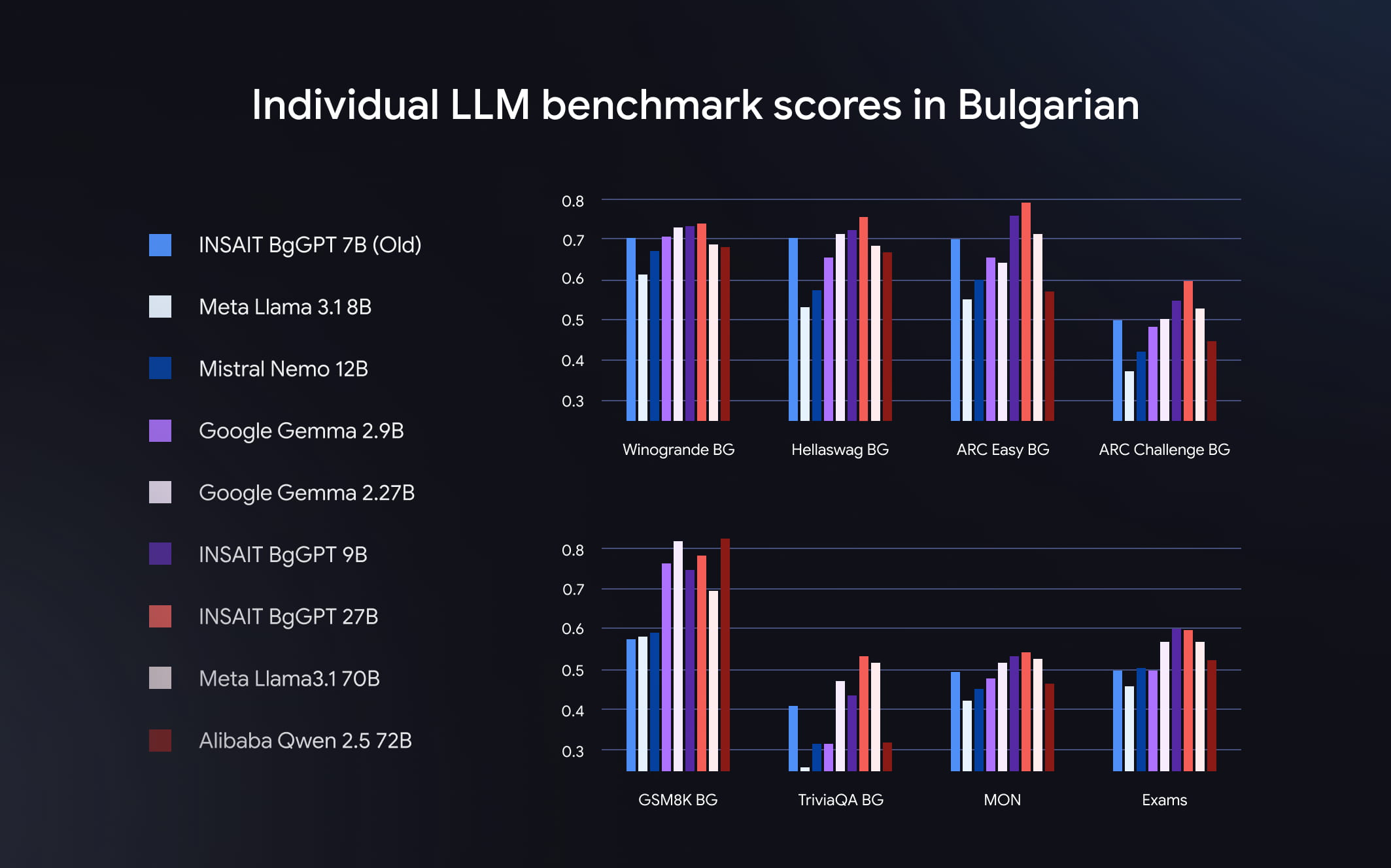

INSAIT researchers created BgGPT to cover a wide range of needs for Bulgarian-speaking developers and users. The model comes in 27B, 9B, and 2B parameter sizes. Both the 27B and 9B variants outpace larger models like Alibaba’s Qwen 2.5 72B and Meta’s Llama 3.1 70B in Bulgarian. Meanwhile, the 2B version outperforms other small language models like Microsoft’s Phi 3.5 and Alibaba’s Qwen 2.5 3B. All three models maintain competitive English performance, thanks to Gemma 2’s impressive linguistic capabilities.

“Gemma helps us achieve state-of-the-art performance in Bulgarian NLP by providing a robust, scalable foundation for fine-tuning.”

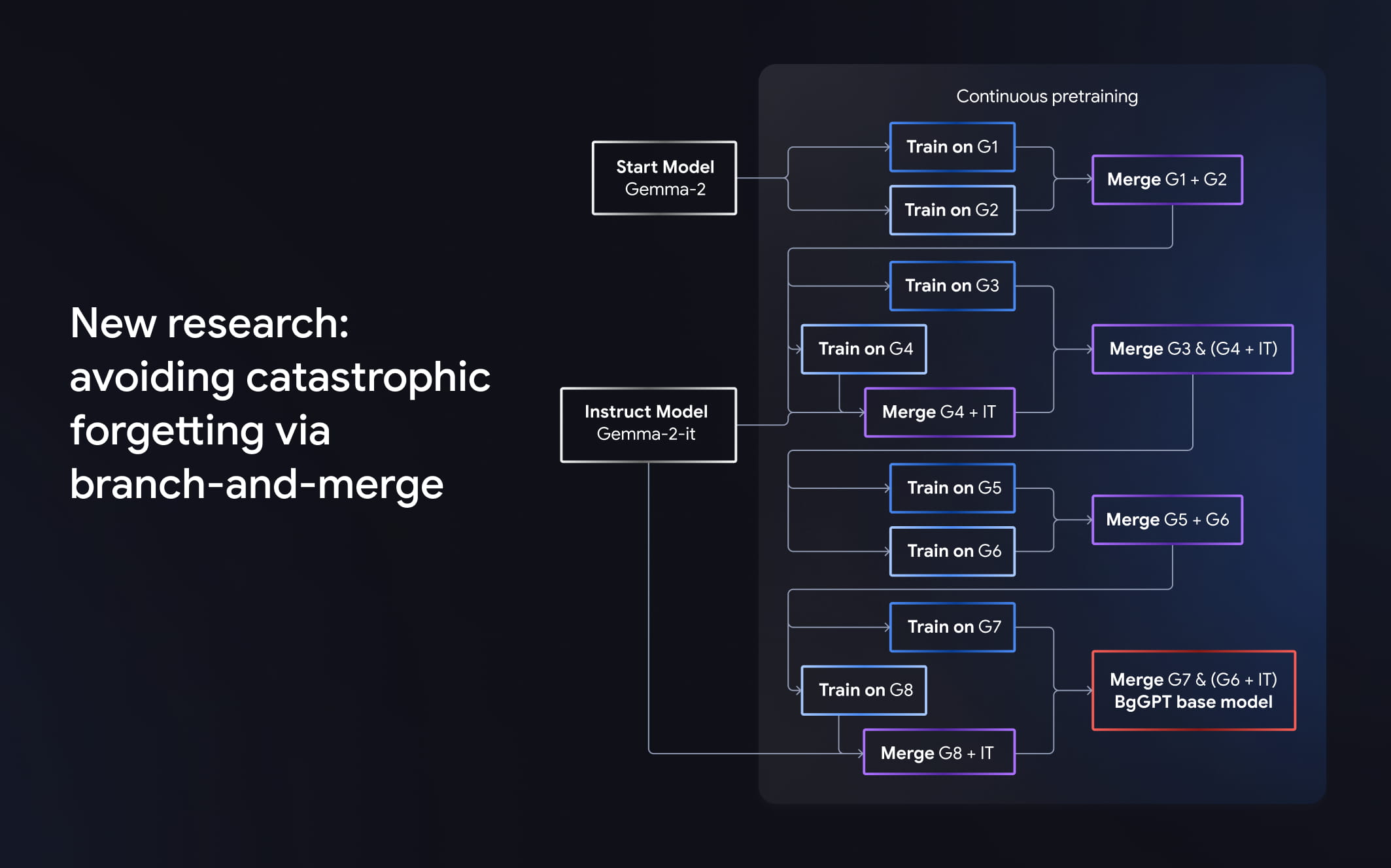

BgGPT was pre-trained on around 85B tokens of Bulgarian text and 15B tokens of English text. A distinctive element of BgGPT’s development was INSAIT’s own Branch-and-Merge continual pre-training strategy, which enables the model to learn new information, like Bulgarian, without replacing or losing old information, like Gemma’s deep understanding of mathematics and English. That loss of previously learned knowledge is referred to as “catastrophic forgetting” and remains a recurring challenge in LLM development.
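The general idea behind a branch-then-merge approach can be illustrated with a toy sketch. This is not INSAIT's implementation: the merge operator (a plain elementwise average), the `toy_train` stand-in for training, and every name below are assumptions made for illustration only. Each branch starts from the same base weights, is updated separately, and the branches are then combined so that no single update dominates.

```python
from typing import Dict, List

# Hypothetical representation: parameter name -> flat list of values.
Weights = Dict[str, List[float]]


def toy_train(base: Weights, shift: float) -> Weights:
    """Stand-in for training one branch on its own data slice.

    Real training would run gradient updates; here every weight is
    simply shifted by a constant so the effect of merging is visible.
    """
    return {name: [w + shift for w in values] for name, values in base.items()}


def merge_branches(branches: List[Weights]) -> Weights:
    """Merge branches by elementwise averaging (an assumed merge operator)."""
    if not branches:
        raise ValueError("need at least one branch to merge")
    merged: Weights = {}
    for name in branches[0]:
        # Group the i-th value of this parameter across all branches.
        columns = zip(*(branch[name] for branch in branches))
        merged[name] = [sum(col) / len(branches) for col in columns]
    return merged


# Branch twice from the same base, "train" each branch, then merge.
base = {"layer.weight": [0.0, 1.0, 2.0]}
branch_a = toy_train(base, 0.2)
branch_b = toy_train(base, -0.1)
merged = merge_branches([branch_a, branch_b])
```

In this toy setup the merged weights sit between the two branches and closer to the base than the larger of the two updates, which is the intuition for why merging can limit how far a model drifts from what it already knew.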

The impact

BgGPT now powers the public chat platform at BgGPT.ai using both its 27B and 2B variants. The 2B model handles specific tasks like rephrasing user queries and classification, while the 27B model handles the conversational elements. Since its release in March 2024, BgGPT.ai has answered millions of user questions. BgGPT’s release also makes INSAIT the first organization in Central and Eastern Europe to launch a globally competitive, publicly developed LLM, establishing the organization as a leader in the region.
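The division of labor between a small and a large model can be sketched as a simple pipeline. The ordering of the steps, the prompt formats, and all function names below are hypothetical, and the two models are replaced with stubs so the example runs on its own. It is not a description of how BgGPT.ai is actually wired together.

```python
from typing import Callable

# A model is treated as a plain function from prompt text to output text.
ModelFn = Callable[[str], str]


def make_pipeline(small_model: ModelFn, large_model: ModelFn) -> Callable[[str], str]:
    """Build a chat handler that uses the small model for helper tasks
    and the large model for the final conversational reply."""

    def answer(user_query: str) -> str:
        # Helper tasks go to the small model (assumed prompt formats).
        rewritten = small_model(f"rephrase: {user_query}")
        label = small_model(f"classify: {rewritten}")
        # The conversational reply comes from the large model.
        return large_model(f"[{label}] {rewritten}")

    return answer


def stub_small(prompt: str) -> str:
    """Stub standing in for a 2B model: trivial rephrasing and classification."""
    task, _, text = prompt.partition(": ")
    if task == "rephrase":
        return text.strip().rstrip("?") + "?"
    return "question" if text.endswith("?") else "statement"


def stub_large(prompt: str) -> str:
    """Stub standing in for a 27B model."""
    return f"27B answer to {prompt}"


pipeline = make_pipeline(stub_small, stub_large)
reply = pipeline("  what is the capital of Bulgaria ")
```

Routing cheap, narrow tasks to the smaller model keeps the larger model's capacity for the part of the exchange that benefits from it most, which matches the operational-cost concern described earlier.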

INSAIT has also shared its Branch-and-Merge continual pre-training strategy and its entire training pipeline with developers, which has the potential to accelerate the development of AI models. The ability to continually expand an LLM’s knowledge base without losing previously learned knowledge stands to improve training efficiency and make LLMs smarter.

48k+

Downloads on Hugging Face*

5M

Questions answered on BgGPT.ai

*Number of downloads from December 1 to December 31, 2024

What’s next

Adoption of BgGPT continues to grow. Pilot programs have begun at Bulgarian government agencies like the National Revenue Agency (NRA), testing the LLM’s effectiveness in specialized scenarios. INSAIT has also expressed interest in expanding BgGPT’s reach to other areas like education, public administration, and business automation.

The passionate developers, researchers, and academics at INSAIT are committed to furthering AI technology in Eastern Europe and abroad. Looking ahead, INSAIT plans to improve BgGPT by potentially incorporating function calling, fine-tuning larger base models, and training models for other countries.